🔴 X SILENCES SPEAKERS, PROFITS FROM ANONYMITY!

SHOCK: X’s Spaces secrecy cripples free speech as anonymous lurkers fuel profit! Exclusive probe reveals hosts terrorised and self-censoring while X rakes in cash, in breach of UK safety laws. Unmask the scandal now!

In a digital age where free speech is heralded as a cornerstone of open discourse, X’s audio platform, Spaces, has become a battleground. The absence of one critical user-controlled feature, a toggle to disable anonymous listeners, stifles expression while bolstering the platform’s financial growth. This omission, which a seemingly simple technical fix could remedy, allows bad-faith actors to exploit live audio conversations, clipping and sharing out-of-context snippets that fuel false narratives and reputational harm.

The lack of this feature not only curbs free speech but also flies in the face of the UK’s Online Safety Act 2023, raising urgent questions about X’s commitment to user safety over profit. As hosts self-censor to avoid harassment and misinformation, the platform’s open-access design paradoxically undermines its stated mission of fostering unfiltered debate, leaving creators vulnerable while X reaps engagement-driven rewards.

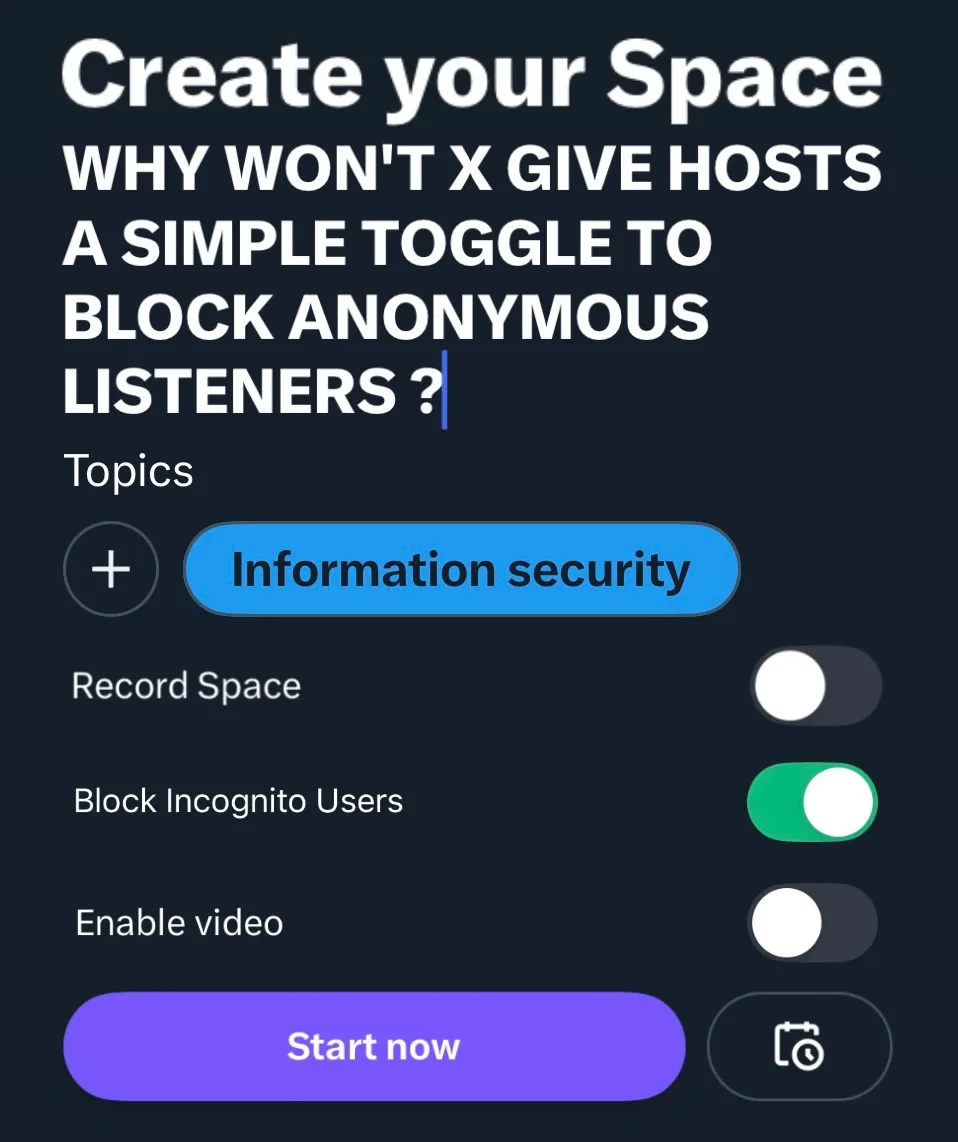

The mechanics of X Spaces reveal a deliberate asymmetry. Hosts can toggle recording on or off, controlling whether a session is archived for replay. Yet they have no power to prevent anonymous listeners (introduced in December 2023 for iOS and the web, and later for Android) from joining undetected. These listeners, shielded by anonymity, can access public or invited Spaces, listen without revealing their identities and, if recording is enabled, extract audio clips to share via DMs, posts or external platforms.

Even in unrecorded Spaces, screen-capturing tools enable bad actors to edit and weaponise snippets, creating distorted narratives that can devastate a host’s credibility. A 2024 thread by a UK-based creator explicitly called out how anonymity “kills open debate” by making hosts paranoid about doxxing or targeted harassment, a sentiment echoed across X by users who report self-censoring or abandoning Spaces altogether due to fear of such attacks.

This chilling effect on free speech is stark. Hosts, aware of invisible audiences, moderate their speech to avoid misinterpretation or malicious clipping, particularly when discussing sensitive topics. The risk is not hypothetical: in 2024, a political figure’s Space was clipped to imply the opposite of their stance, sparking viral backlash and reputational damage. Such incidents, facilitated by anonymous access, deter creators from engaging fully, with many reducing participation or exiting Spaces entirely.

This directly contradicts X’s mission, articulated by its leadership, to maximise open discourse. By prioritising listener anonymity over host agency, X creates an environment where speakers bear disproportionate risk, undermining the very principle of unfiltered expression the platform claims to champion.

The UK’s Online Safety Act 2023, whose duties take effect from 2025, casts a spotlight on this failure.

The Act mandates that platforms like X take proactive steps to mitigate “priority harms,” including harassment, misinformation and threats to user safety. Sections 9 and 10 require providers to conduct risk assessments and implement “proportionate measures” to prevent harm, and Ofcom is empowered to issue fines of up to £18 million or 10% of qualifying worldwide revenue, whichever is greater, for non-compliance. Allowing anonymous listeners without a host-controlled toggle violates this framework. Anonymity enables bad-faith actors to infiltrate Spaces, record or clip audio and disseminate false narratives, directly contributing to harassment and reputational harm.

The Act’s emphasis on user empowerment—through tools to control one’s online environment—makes X’s omission a legal contradiction. A simple toggle, requiring listeners to join with visible identities, would align with the Act’s demand for proactive harm prevention, yet X has not acted, despite the feature’s technical simplicity.

The financial calculus behind this inaction is clear. Anonymous listening boosts engagement, a key driver of X’s ad-based revenue model, which accounts for approximately 70% of its income. Live audio features like Spaces increase user retention by 20-30%, per metrics from comparable platforms, as low barriers to entry draw larger audiences. Anonymous listeners, unburdened by identity disclosure, join more readily, inflating participation and ad impressions.

The platform’s algorithm, designed to amplify high-interaction content, further benefits when clipped audio sparks viral disputes, driving 40% more user time than neutral posts, according to 2024 research. By omitting a toggle, X ensures maximum accessibility, even if it perpetuates a cycle of drama that harms creators. The majority of hosts, if given the option, would likely disable anonymous listeners to protect their spaces, a move that could shrink audience sizes and dent X’s growth metrics—a risk the platform appears unwilling to take.

This prioritisation of profit over safety is not speculative. The absence of a toggle sustains a system where engagement trumps accountability. Bad-faith actors exploit anonymity to clip audio, creating false narratives that escalate conflicts and boost platform activity. A 2025 study noted that audio clips are 50% more likely than text to be shared out of context, amplifying their potential for harm. Yet X, despite user complaints since 2023, has not addressed this, focusing instead on AI integration and video features following its 2022 staffing cuts.

The platform’s “free speech absolutism” ethos, while protecting vulnerable listeners in oppressive contexts, ignores the chilling effect on hosts, who face real risks of doxxing, harassment, or professional sabotage. This asymmetry reveals a platform more concerned with scale than with fostering genuine discourse.

Regulatory pressure may yet force change. The Online Safety Act’s enforcement, under Ofcom’s oversight, could deem anonymous listening a systemic risk, compelling X to implement user controls. User feedback, though scattered, is growing, with creators demanding tools to manage their audiences.

Until then, hosts are left with inadequate workarounds: private Spaces, which still allow invited anonymous listeners, or disabling recordings, which does not prevent live screen-captures. These half-measures fail to address the core issue: without a toggle to disable anonymity, hosts cannot speak freely, stifling the very debate X claims to protect.

The absence of an anonymous listener toggle in X Spaces is a deliberate choice, one that prioritises financial growth and user volume over creator safety and authentic expression. This omission not only curbs free speech by forcing hosts to self-censor but also contravenes the UK’s Online Safety Act by failing to mitigate preventable harms.

As X continues to benefit from the engagement driven by unchecked anonymity, creators bear the cost, silenced by fear of misrepresentation and harassment. The platform’s refusal to implement a simple, user-empowered solution reveals a stark truth: in the pursuit of profit, X sacrifices the very principles it claims to uphold, leaving its users to navigate a digital landscape where safety and free speech are left completely at odds.

Well, that’s all for now. But until our next article, please stay tuned, stay informed, but most of all stay safe, and I’ll see you then.